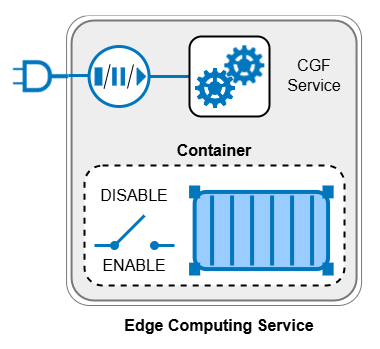

Edge Computing is a new approach to increasing edge security on the Barracuda CloudGen Firewall (CGF) without additional infrastructure. It allows you to run applications directly on the firewall while keeping communication latency to a minimum and maintaining the overall security provided by the firewall.

At the implementation level, the Barracuda CloudGen Edge Computing feature lets you run containers, within limits, on the firewall. Edge Computing supports the Open Container Initiative (OCI) standard, so organizations can run almost any OCI-compliant application. For example, a container with the appropriate software could collect and analyze the CGF’s real-time network traffic and send the results to another target for post-processing.

The firewall handles the Edge Computing environment as a separate security zone, enabling granular control over traffic flows while leveraging Next-Gen security technologies.

Edge Computing can be used on standalone and managed firewalls.

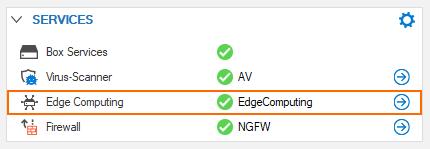

Edge Computing Service

Edge Computing runs in a protected environment (sandbox) on the CGF and is nested at the CGF’s service level. The core of Edge Computing is container technology, which allows multiple sandboxed instances to run side by side on a common platform.

However, due to resource limitations, the CloudGen firewall permits only a single container to run. To give the user the familiar CGF experience, the container is wrapped in a CloudGen service context with its service configuration fields.

Configuration and UI-Staging

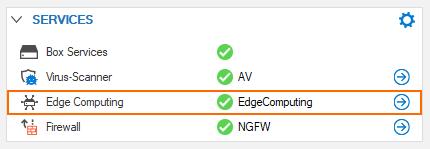

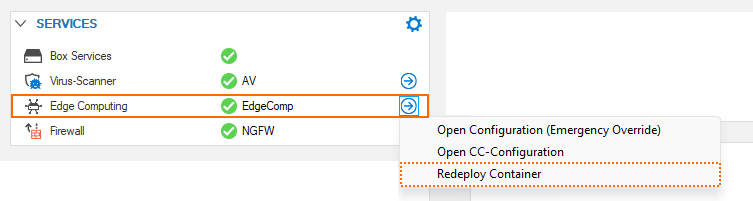

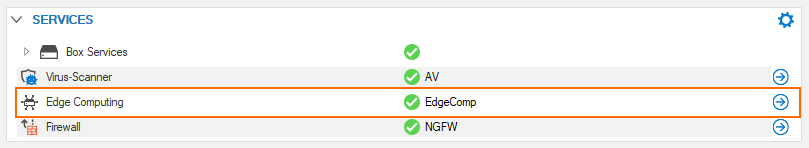

Edge Computing appears in several places in the Barracuda Firewall Admin user interface:

DASHBOARD

Clicking the blue arrow on the right side will show the option where you can redeploy the container if necessary.

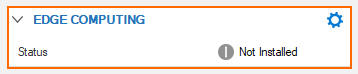

EDGE COMPUTING

The Edge Computing dashboard element can have two appearances.

As long as Edge Computing is disabled, the dashboard will show this element:

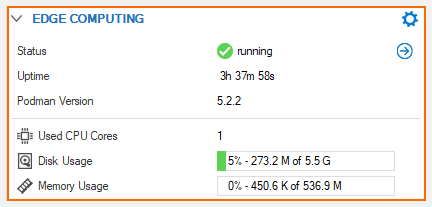

After enabling Edge Computing, the dashboard shows this element:

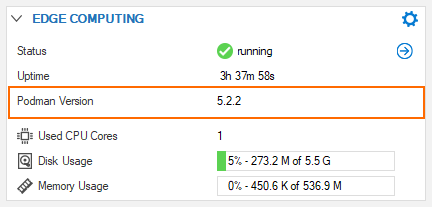

The edge computing element shows:

Status – Whether the service is running.

Uptime – How long the service has been running since its last start.

User CPU Cores – How many cores are currently used for edge computing.

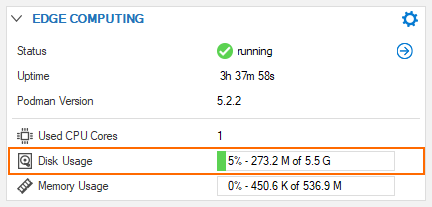

Disk Usage – How much space the container consumes on the hard disk.

Memory Usage – How much memory the service uses during its operation.

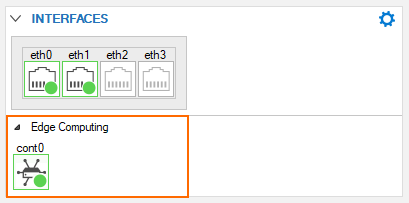

Interfaces

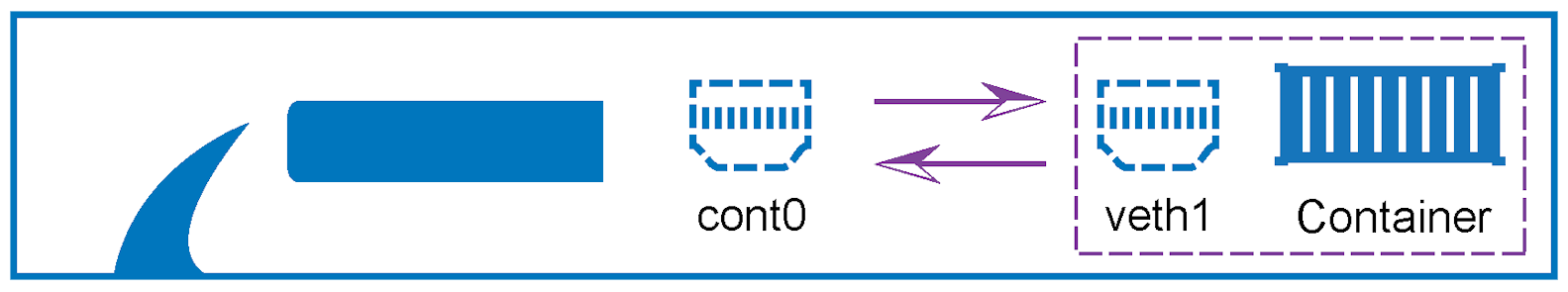

The CloudGen firewall uses two interfaces to communicate with the container. In the INTERFACES element, however, only the CGF-sided interface is displayed because the second interface resides inside of the container.

CONTROL

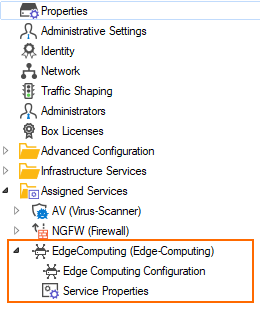

Configuration Tree

Edge Computing is available as a firewall service and can be configured as part of the Assigned Services configuration node in the configuration tree:

Container Control

Because containers adhere to the Open Container Initiative (OCI) standards, the CloudGen firewall includes a copy of Podman and uses it to manage containers on the firewall. Podman forwards commands from the firewall to the container, and it forwards output from the container’s running process to the CGF, which in turn writes it to the appropriate logs.

The entry for Podman is visible as soon as Edge Computing is enabled in the EDGE COMPUTING element:

Availability, Usage, and Limitations

Edge Computing can run on all CloudGen firewall models (both boxes and virtual appliances). It is limited only by the firewall’s resources (CPUs, hard disk, memory). To ensure that the CloudGen firewall still operates within its expected performance limits, Edge Computing can use at most 1 CPU and 512 MB of working memory. The disk space available to Edge Computing is limited to 10% of the size of the /phion0 partition, which is the main operative partition of the firewall.
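
The limits above can be summarized in a few lines of Python. This is only an illustrative sketch: the function name, the dictionary keys, and the 40,000 MB partition size are assumptions made for the example and are not part of the firewall's configuration or API.

```python
# Limits as stated in the text; all names here are illustrative only.
MAX_CPUS = 1
MAX_MEMORY_MB = 512
DISK_SHARE_PERCENT = 10  # share of the /phion0 partition


def edge_computing_limits(phion0_size_mb: int) -> dict:
    """Return the resource ceiling for Edge Computing for a given partition size."""
    return {
        "cpus": MAX_CPUS,
        "memory_mb": MAX_MEMORY_MB,
        # Integer arithmetic avoids floating-point rounding surprises.
        "disk_mb": phion0_size_mb * DISK_SHARE_PERCENT // 100,
    }


# Hypothetical /phion0 partition of 40,000 MB.
limits = edge_computing_limits(40_000)
print(limits)
```

On the assumed 40,000 MB partition, the disk ceiling works out to 4,000 MB.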

Container Storage, Access, Security, and Container Network

To protect the CGF, root access from the container to the firewall is not possible.

A container is stored on the firewall in the directory /var/phion/preserve/container/edgecomputing.

To exchange information between the CGF and the container, these two instances can communicate on the following levels:

Initial Data

When a container starts, information can be passed from the CGF to the container in environment variables. Podman serves as the intermediary for controlling the container’s behavior.

Continuous Data

Network traffic can be routed between the CGF and the container by configuring a transfer network with two bridging interfaces. The interface is named cont0 on the CGF side and veth1 (virtual eth1) on the container side.

You can configure this transfer network at CONFIGURATION > Config Tree > Box > Assigned Services > Edge Computing > Network Setup, section Container Network.

The container network configuration is preset with the following default values:

Transfer Networkmask: 30 Bit

Transfer IP address Barracuda Cloud…: 172.16.30.1

This IP address serves as the gateway to the CGF.

Transfer IP address Container: 172.16.30.2
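
To see why a 30-bit mask fits this setup, the default values can be checked with Python's standard `ipaddress` module. This is a small sketch for illustration; the variable names are arbitrary and only the addresses and mask come from the defaults above.

```python
import ipaddress

# Default values from the container network configuration.
cgf_side = ipaddress.ip_interface("172.16.30.1/30")
container_side = ipaddress.ip_interface("172.16.30.2/30")

# Both interfaces must sit in the same transfer network.
transfer_net = cgf_side.network
assert container_side.network == transfer_net

# A /30 network contains exactly two usable host addresses.
usable = list(transfer_net.hosts())
print(transfer_net)
print(usable)
```

A /30 network leaves room for exactly two hosts, one for each end of the transfer network. If you change the defaults, both addresses must stay inside the same network.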

Access Rules

Two default access rules that come with firmware 10.0 control the flow between the container and the attached network:

Host Firewall

The host firewall ruleset in firmware 10.0 contains preset access rules which are enabled by default:

Inbound Access Rule

Select Inbound at the top of the access rule list:

Outbound Access Rule

Select Outbound at the top of the access rule list:

Forwarding Firewall

The forwarding firewall ruleset in firmware 10.0 contains a preset access rule which is disabled by default:

File Share

To pass execution-relevant data to the container, the Edge Computing service provides persistent storage on the CGF. The storage resides in a dedicated directory and remains there even if the container is disabled. For more information, see File Sharing.

Configuring a Container

Registry

To use a container, you must download it from a source that provides the container image. This location is called the Registry.

Some commonly used image locations are:

docker.io/library/alpine

docker.io/weibeld/ubuntu-networking

Tags

By convention, the newest container image in a sequence is identified by the suffix ‘:latest’. In practice, you do not have to specify this suffix. However, if the container provider publishes multiple versions of a container, they can explicitly append a tag to the container name to distinguish the versions, e.g., example_container:V1.0.0.

In this example, the version number V1.0.0 is the tag. The template for using a tag is: <containername>:<tag>.

If you want to ensure that the newest container version is downloaded, you can append the tag ‘:latest’. Omitting the tag will also default to downloading the newest version.
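
The `<containername>:<tag>` template can be illustrated with a short Python sketch that splits a reference into name and tag and falls back to `latest` when no tag is given. The function name is hypothetical, and the sketch does not handle digest references (`@sha256:...`). It only mirrors the naming rule described above, not how the firewall or Podman parses references internally.

```python
def split_image_reference(reference: str, default_tag: str = "latest"):
    """Split '<containername>:<tag>' into (name, tag).

    A colon only marks a tag if it appears after the last slash;
    otherwise it belongs to a registry host such as 'localhost:5000'.
    """
    last_slash = reference.rfind("/")
    last_colon = reference.rfind(":")
    if last_colon > last_slash:
        return reference[:last_colon], reference[last_colon + 1:]
    return reference, default_tag


print(split_image_reference("example_container:V1.0.0"))
print(split_image_reference("docker.io/library/alpine"))
```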

Executing Start Commands

If you want to execute specific commands when the container is activated, you can configure a start command to be executed by the container’s shell, e.g., start_my_own_command.sh.

Disk Space Quota

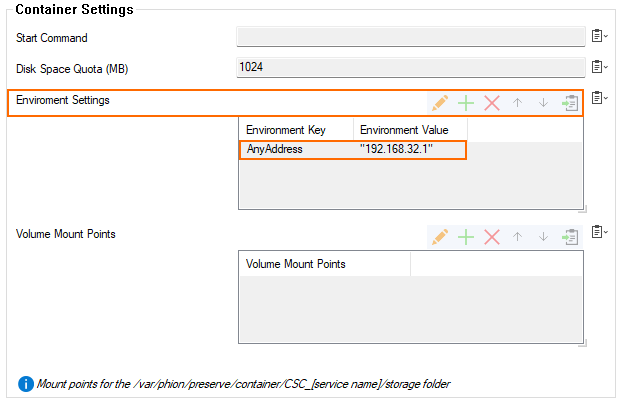

By default, the CloudGen firewall provides a maximum of 10% of the internal disk space to be used by a container. Because this could be too much in some cases, you can limit the maximum amount of disk space to be used by the container by specifying it in megabytes according to your own requirements. The screenshot shows a configured disk quota of ~5%.
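
Because the quota is entered in megabytes while the limit is expressed as a percentage, a quick conversion can help when choosing a value. The following Python sketch is illustrative only: the function names and the 40,000 MB disk size are assumptions, and the clamping to 10% is a pre-check you might do yourself, not a description of how the firewall enforces the limit.

```python
def quota_share_percent(quota_mb: int, disk_size_mb: int) -> float:
    """Return the quota as a percentage of the disk size."""
    return 100.0 * quota_mb / disk_size_mb


def effective_quota_mb(requested_mb: int, disk_size_mb: int, cap_percent: int = 10) -> int:
    """Clamp a requested quota to the documented maximum share of the disk."""
    cap_mb = disk_size_mb * cap_percent // 100
    return min(requested_mb, cap_mb)


disk_size_mb = 40_000  # hypothetical disk size
print(quota_share_percent(2_000, disk_size_mb))
print(effective_quota_mb(9_000, disk_size_mb))
```

With these assumed numbers, a quota of 2,000 MB corresponds to 5% of the disk, and a request of 9,000 MB is reduced to the 4,000 MB cap.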

Environment Variables

If you are creating a container and you must pass initial data to the container, you can use environment variables. Data is passed by a key-value pair which must be configured as part of a specific container in the Environment Settings list of the Container Settings section.

You can store any value in such an environment variable as long as Linux standards are satisfied. For example, you can pass a certificate to a container by storing the content of a .pem file in an environment variable.
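
Inside the container, an application could read such a variable as sketched below in Python. The variable name `EDGE_CERT_PEM` and the function name are hypothetical, and the check is only a rough plausibility test for PEM markers, not a certificate validation.

```python
import os


def load_pem_from_env(name: str, environ=None) -> str:
    """Read PEM text from an environment variable and sanity-check it."""
    env = os.environ if environ is None else environ
    value = env.get(name)
    if value is None:
        raise KeyError(f"environment variable {name!r} is not set")
    # Rough plausibility check only: PEM data is framed by BEGIN/END markers.
    if "-----BEGIN" not in value or "-----END" not in value:
        raise ValueError(f"environment variable {name!r} does not look like PEM data")
    return value


# Usage inside the container (hypothetical variable name):
#     pem_text = load_pem_from_env("EDGE_CERT_PEM")
```

The optional `environ` parameter exists only so the function can be tried out with a plain dictionary instead of the real process environment.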

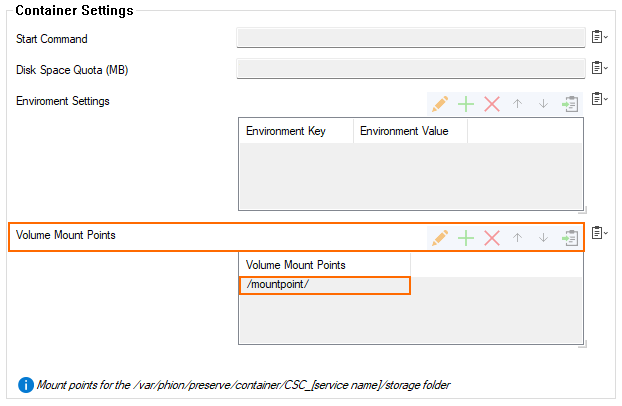

File Sharing

File shares for Edge Computing are intended to pass operation-relevant data between the CGF and the container. Such a file share is storage on the CGF side that persists across reboots of the firewall and is therefore suited for passing data that the container requires for its operation (e.g., configuration files, databases, …).

On the CGF side, the folder /var/phion/preserve/container/CSC_<containername>/storage is used as the CGF-sided file-share point.

To access the content of the directory ‘storage’ from the container, you must configure a mountpoint that is created relative to the container’s root directory, e.g., ‘myContainerFileShare’:

| CGF-Sided File-Share Path | Container Mountpoint | Container-Sided File-Share Path |
|---|---|---|
| | | |
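
The relationship between the two sides of a file share can be sketched in Python as follows. The function names and the container name `demo` are hypothetical. The CGF-side layout follows the folder pattern given above, and the container-side path assumes the mountpoint is created directly under the container's root directory, as described.

```python
from pathlib import PurePosixPath

# Base directory taken from the CGF-side folder pattern in the text.
CONTAINER_BASE = PurePosixPath("/var/phion/preserve/container")


def cgf_share_path(container_name: str) -> PurePosixPath:
    """CGF-side file-share point for a given container name."""
    return CONTAINER_BASE / f"CSC_{container_name}" / "storage"


def container_share_path(mountpoint: str) -> PurePosixPath:
    """Container-side path, assuming the mountpoint hangs off the root directory."""
    return PurePosixPath("/") / mountpoint.strip("/")


print(cgf_share_path("demo"))  # 'demo' is a hypothetical container name
print(container_share_path("myContainerFileShare"))
```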

You can configure any number of mountpoints for a container in the Container Settings section of the Edge Computing Configuration node:

Traffic Mirroring

With traffic mirroring, the CGF sends a copy of the traffic to the container. Depending on specific configuration switches, mirrored traffic can be encapsulated in UDP packets or sent without encapsulation.

With encapsulation, the UDP packets use the Box IP as the source IP and the container IP as the destination IP. The default UDP source and destination port is 6000, and the default destination IP is 172.16.30.2.

When you enable traffic mirroring, you can override these default values according to your requirements.

Traffic mirroring is disabled by default and can only be used in Firewall Admin’s Advanced Mode.
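
If UDP encapsulation is used, an application in the container could receive the mirrored traffic with a plain UDP socket. The Python sketch below assumes the default port 6000. The function names are hypothetical, and the payload is treated as opaque bytes because its exact framing is not described here.

```python
import socket


def open_mirror_socket(bind_ip: str = "0.0.0.0", port: int = 6000) -> socket.socket:
    """Open a UDP socket on the port the CGF mirrors to (default 6000)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((bind_ip, port))
    return sock


def read_datagrams(sock: socket.socket, count: int, bufsize: int = 65535):
    """Read `count` datagrams and return (payload, source_ip, source_port) tuples."""
    received = []
    for _ in range(count):
        payload, (src_ip, src_port) = sock.recvfrom(bufsize)
        received.append((payload, src_ip, src_port))
    return received


# Usage inside the container:
#     sock = open_mirror_socket()
#     for payload, src_ip, src_port in read_datagrams(sock, 10):
#         print(src_ip, src_port, len(payload))
```

With the default settings, the source IP reported for each datagram would be the Box IP.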

Starting a Container

To make a container operational, you must perform two steps:

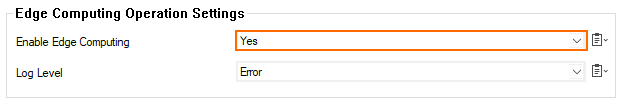

In the Edge Computing Operation Settings configuration section of the container service, you must enable the container in addition to setting all other configuration fields. The newest available image of the container is then downloaded from the configured registry, and the container is enabled to run.

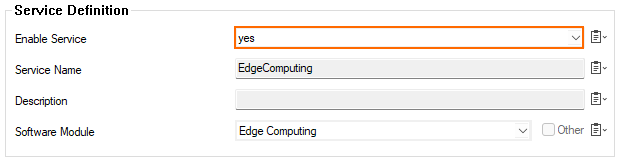

In the property fields of the Edge Computing service at CONFIGURATION > Box > Services > Edge Computing > Service Properties, you must activate the service on the level of a CloudGen service.

Configuring a Single Container

The combination of these two configuration options leads to different behaviors of a container.

Therefore, you must consider:

Enabling Edge Computing in the container configuration causes the container image to be downloaded from the configured registry.

After a successful download, the container is immediately started.

When you disable the container, its image is deleted from the hard disk to save storage, and the configured version of the container image will be loaded as soon as you re-enable it.

A CGF service is itself an active instance. In the context of Edge Computing, it wraps around the container, which in turn runs autonomously. Depending on the configuration switches of these two instances, this encapsulation of an autonomous container within a CGF service leads to the following operative states:

| SINGLE Edge Computing Service | Container IS NOT Configured | Container is Configured and Inactive | Container is Configured and Activated |
|---|---|---|---|
| CGF Service is Inactive | The service will have no impact. | The service will have no impact. | The container will persist on the firewall but will have no operative effect. The container will also persist across reboots and will reactivate much faster because the container is not removed. The Edge Computing service will show as Not installed. |
| CGF Service is Active | The Edge Computing service will show as active. However, due to the missing configuration, the service will have no impact. | The Edge Computing service will show as active. The container is stopped, and the container image is removed and will be reloaded with the next activation of the container. The service and the container will have no impact. | The Edge Computing service will show as active. The container image is loaded, and the container is active. |
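
The six combinations in the table can be expressed as a small decision function. The Python sketch below simply restates the table. The function name and the summary strings are illustrative and do not correspond to any status text shown by the firewall.

```python
def container_state(service_active: bool, container_configured: bool,
                    container_enabled: bool) -> str:
    """Summarize the operative state for one Edge Computing service."""
    if not container_configured:
        # With or without an active service, nothing can run.
        return "no impact"
    if not container_enabled:
        if service_active:
            return "container stopped, image removed until next activation"
        return "no impact"
    if service_active:
        return "image loaded, container active"
    return "container persists, no operative effect"


for service in (False, True):
    for configured, enabled in ((False, False), (True, False), (True, True)):
        print(service, configured, enabled, "->",
              container_state(service, configured, enabled))
```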

Configuring Multiple Containers

You can configure any number of additional Edge Computing services.

For more information, see How to Alternate Between Multiple Configured Edge Computing Services.

Stopping a Container

You can stop a container in three ways:

Disable the container at the level of its configuration:

Go to CONFIGURATION > Configuration Tree > Config Tree > Box > Services > Edge Computing > Edge Computing Configuration, section Edge Computing Operation Settings, and set Enable Edge Computing to No.

Disable the CGF service that wraps the container:

Go to CONFIGURATION > Configuration Tree > Config Tree > Box > Services > Edge Computing > Service Properties, section Service Definitions, and set Enable Service to No.

Stop the container by managing the service’s state at different locations in the UI (Start, Stop, Block).

For more information, see Services Page | Control of Services.

Log Messages of the Container

Edge Computing outputs messages to the logs located at LOGS > your box > CSC > Assigned Services > Edge Computing:

activate – Contains all messages that relate to activating/deactivating the service.

cleanup – Contains messages related to cleanups of the Edge Computing user home directory.

daemon – Contains messages that the Edge Computing daemon outputs during operation.

container – Contains messages from Podman and the container application.

Examples for log output:

| Logfile | Log Content | Note |
|---|---|---|
| Box > Assigned Services > Edge Computing > activate | | The disk quota shows that the amount of reserved disk space for the container has been configured to be ~5%. |
| Box > Assigned Services > Edge Computing > daemon | | The log output shows that the container was running for 6 minutes and was removed after it was disabled in the configuration. |